At SaaStr AI Day, Mike Tamir, Head of AI at Shopify, and Rudina Seseri, founder and Managing Partner at Glasswing Ventures, level-set on where Enterprises are in the AI adoption cycle and on the critical work Shopify is doing to apply AI to real problems.

Mike and Rudina do a great job of explaining complex AI terminology so that non-technical founders and leaders can understand it and apply it to their businesses. Today, many AI initiatives are mostly superficial, with limited impact on a dollar basis. At Glasswing Ventures, they tell their AI-native companies not to lead with AI because it’s such a superficial indication of interest.

On an adoption basis, there have been some “green shoots,” particularly Klarna, which has moved AI out of siloed projects and the customer interface and into its core business, where it now does the equivalent work of 700 people and handles over two-thirds of customer service interactions. Why is Klarna noteworthy? Because it has contributed $40M to its bottom line by bringing AI to its core product and business, and it’s one of the few companies that have.

The Enterprise Mindset Around AI and Where We’re Headed

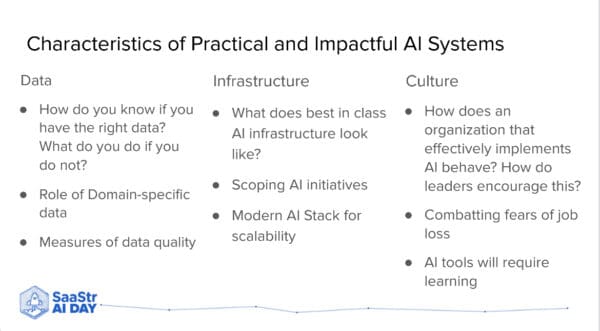

There’s extra work to be done around building the infrastructure to leverage AI for the core business. Rudina sees it along three dimensions:

- Data

- Infrastructure

- A culture of adopting AI

Where are we heading as we try to adopt AI in the Enterprise?

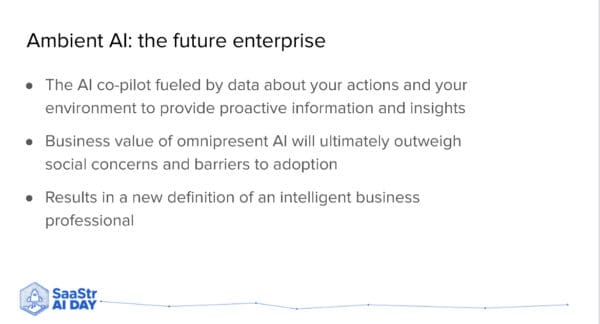

The future of Enterprise is “Ambient AI.” If the input is you, the business, an individual leader or contributor, your activities, and your environment, then the outputs are insights that you wouldn’t be able to derive on your own.

Ambient AI automates the mundane, low-productivity, and repeatable tasks to free you and your team up to be more valuable. People will have a leg up if they’re leveraging intelligent tools.

The layout and framework of data, infrastructure, and culture will be even more important as AI shifts to Enterprise.

Data is the Biggest Bottleneck for Founders

Data is often the messiest and most time-consuming part to deal with. Many people think, “We have data, so why not build the models?” In reality, most of your time is spent gathering and cleaning the data and making sure it’s the right quality.

You train the models, and it’s an iterative process that requires the right measures and the right data. When you train a model, it’s trial and error. You give the model information and what you’re trying to predict. Then, it takes that information and makes a prediction. From there, you have to see how far it missed, take that error, use some math, and try to adjust all the little parameters and calculations it makes along the way.
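The trial-and-error loop described above can be sketched in a few lines. This is a minimal illustration, not how Shopify trains its models: it assumes a toy model with two parameters (`w` and `b`), a mean squared error measure, and made-up data points, and the learning rate and epoch count are arbitrary choices.

```python
# Toy training loop: fit y = w * x + b by gradient descent on mean squared error.
# All names, data, and hyperparameters here are illustrative assumptions.
def train(xs, ys, lr=0.05, epochs=2000):
    w, b = 0.0, 0.0  # the "little parameters" the model adjusts
    n = len(xs)
    for _ in range(epochs):
        # 1. Make predictions with the current parameters.
        preds = [w * x + b for x in xs]
        # 2. See how far each prediction missed.
        errors = [p - y for p, y in zip(preds, ys)]
        # 3. Use some math: the gradient of the mean squared error.
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        # 4. Nudge each parameter in the direction that shrinks the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
w, b = train(xs, ys)
print(round(w, 3), round(b, 3))
```

If the `ys` were noisy or mislabeled, the same loop would faithfully learn the wrong line, which is the practical reason data quality dominates the work.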

That’s why data matters. If you don’t have the right data, you can’t train a model well.

Why is data so hard?

There are two parts to the challenges data poses.

- Culture

- Structure

You want a culture of checking results and having metrics to evaluate those results from the LLM or a more traditional model. You want a culture that focuses on your metrics and evaluating what’s important to you.

When you take the data, you may have a business goal of making more sales or delighting the customer. Whatever the goal is, you have to translate it into a concrete metric.

This will be some type of math function (often called a loss function) that answers the question: how badly did my prediction miss? Figuring out that answer is complicated.
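As one hedged example of such a function, suppose the business goal is “more sales” and the model predicts the probability that a visitor buys. A common choice for scoring those predictions is log loss (binary cross-entropy). The data and the `log_loss` helper below are illustrative assumptions, not something taken from the talk.

```python
import math


def log_loss(y_true, y_prob, eps=1e-12):
    """Average binary cross-entropy. Lower is better."""
    total = 0.0
    for y, p in zip(y_true, y_prob):
        p = min(max(p, eps), 1 - eps)  # clamp so we never take log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


# Hypothetical outcomes: 1 = the visitor bought, 0 = they did not.
bought = [1, 0, 1, 0]
good_model = [0.9, 0.1, 0.8, 0.2]  # confident and mostly right
bad_model = [0.4, 0.6, 0.3, 0.7]   # leans the wrong way every time

print(log_loss(bought, good_model))
print(log_loss(bought, bad_model))
```

The model that leans the wrong way gets the larger number, so the function turns “how badly did I miss?” into something a training loop can minimize.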

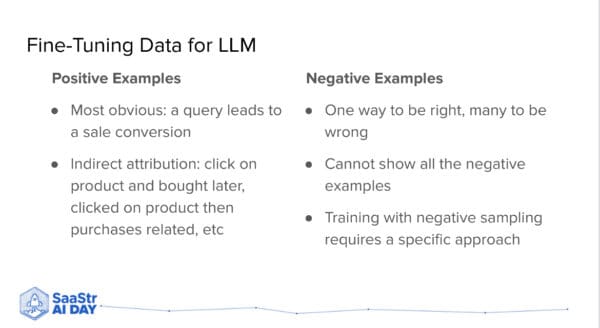

The structure is also important. If you give the model a bunch of examples of inputs and the outputs to expect or not expect, the model will learn that specific pattern. So, if your examples are bad or dirty, or your negative cases are uninformative (an obviously wrong example teaches the model less than an almost positive one), you’ll struggle.

Having clean data is the lifeblood of how the model works and, ultimately, how the product is successful.

Incumbents vs. Open Source

On the infrastructure side, without getting too technical, you can only affect the decisions being made at the infrastructure level so much. What should founders know about the modern AI stack that Enterprises can scale on?

It depends on the use case and context because every Enterprise will have different needs and applications. When it comes to doing more advanced training, they’ll need GPUs. A small startup may need just a single GPU, while a bigger company may need a cluster of GPUs, which requires infrastructure management.

In the last several years, there has been a shortage of GPUs. Historically, Cloud platforms like AWS and Azure have helped with sporadic needs, such as renting a GPU for a few hours of training, as opposed to long-term use, which would cost thousands of dollars.

Being able to size up what kind of training you need and making choices based on that answer will determine the stack Enterprises can scale on.

Enterprises value variable costs over fixed costs, and fast ramp times. As we move into an AI-native world, you have to decide what your highest pain point is right now.

If someone doesn’t want to switch from AWS because AWS has partnerships with OpenAI, there are tradeoffs. A first-party solution from OpenAI or Anthropic will have different performance and latency than the same model served through your Cloud provider. Staying put gives you the simplicity of not onboarding another vendor, but it will have an impact on performance.

Today vs. 10 Years Ago: What Do You Need from a Culture Perspective

One of the beautiful but double-edged things happening today is how easy libraries have made things. In the olden days of 10 years ago, if you wanted to put together a model, you would have to build it layer by layer and design it yourself.

For the most part, you now get a pre-trained model that someone else trained, and you tweak its decisions to fit your particular data. The days of coding up your own classification model, or having ML engineers do that by hand, are over.

So, you want to enable your engineers to take advantage of this by having the right metrics, making sure the data is clean, and checking that the model isn’t overfitting, even for models that seem to take care of themselves. Make sure you have rigorous metric supervision and evaluation of how your models are doing.

Being able to back up the model’s performance before you go into release is probably one of the biggest parts of having a strong ML culture.

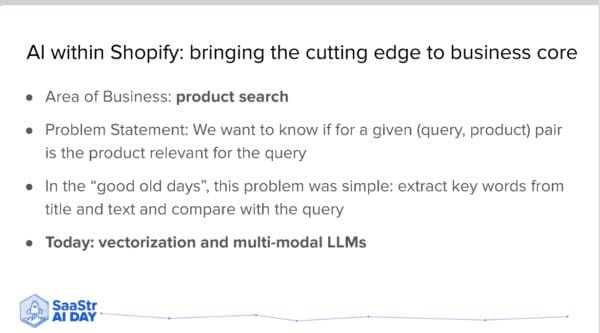

Shopify Initiatives: Search Relevance is the Most Important

Shopify is one of the largest e-commerce platforms in North America. That means it has to answer a fundamental question: when a buyer searches with a query, which products match that query?

That matters for buyers who want to shop within a particular merchant’s shop on Shopify. There’s also the option to shop across multiple products and providers, so search relevance is, first and foremost, very important.

High quality is also important. If you search for boots and end up with boot socks, ski boots, and non-relevant things, you need to be able to evaluate that and iterate to be able to give the buyer the right results.

To do that, you need an evaluation method, and you need to care about your metrics.
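One simple evaluation method, offered here as an illustration rather than as Shopify’s actual metric, is precision at k: of the top k results returned for a query, what fraction did a human label as relevant? The product names, the labels, and the two hypothetical rankers below are all made up for the boots example.

```python
def precision_at_k(results, relevant, k):
    """Fraction of the top-k results that were labeled relevant."""
    top_k = results[:k]
    if not top_k:
        return 0.0
    hits = sum(1 for item in top_k if item in relevant)
    return hits / len(top_k)


# Hypothetical human labels for the query "boots".
relevant_for_boots = {"leather boots", "hiking boots", "rain boots"}

# Two hypothetical rankers returning results for the same query.
ranker_a = ["boot socks", "ski boots", "leather boots", "hiking boots"]
ranker_b = ["leather boots", "hiking boots", "rain boots", "boot socks"]

print(precision_at_k(ranker_a, relevant_for_boots, k=3))
print(precision_at_k(ranker_b, relevant_for_boots, k=3))
```

With a number like this per query, you can compare two versions of a search model on the same set of labeled queries and iterate on whichever scores worse.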

Two Potential Metrics for a Company Like Shopify

Not all metrics are created equal across the business. What’s a good enough metric for searching for an item on Shopify? If a customer gets the item 50% of the time, that might be great in one context but utterly insufficient in another.

Shopify approaches this in two dimensions.

- Relevance

- Cross-selling

One person might be looking for a product and want to see that one thing. Another might be shopping for boots, and they want socks and some cleaner to match and keep them nice.

These can be two different metrics. Maybe one metric shows direct relevance, and another shows complementary relevance. It’s a product decision of how much you want to balance these. And there’s an interdependency between your teams and whoever is responsible for the bottom line.
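To make the balancing act concrete, here is a minimal sketch that blends the two scores with a single weight. It assumes each candidate product already has a direct-relevance score and a complementary-relevance score between 0 and 1. Those scores, the product names, and the `cross_sell_weight` knob are hypothetical.

```python
def blended_score(direct, complementary, cross_sell_weight):
    """Weighted mix of direct and complementary relevance."""
    if not 0.0 <= cross_sell_weight <= 1.0:
        raise ValueError("cross_sell_weight must be between 0 and 1")
    return (1 - cross_sell_weight) * direct + cross_sell_weight * complementary


def rank(candidates, cross_sell_weight):
    """Sort product names by blended score, best first."""
    return sorted(
        candidates,
        key=lambda name: blended_score(*candidates[name], cross_sell_weight),
        reverse=True,
    )


# Hypothetical (direct, complementary) scores for the query "boots".
candidates = {
    "leather boots": (0.95, 0.10),
    "boot socks": (0.20, 0.90),
    "boot cleaner": (0.10, 0.80),
    "fireworks": (0.01, 0.02),
}

print(rank(candidates, cross_sell_weight=0.0))  # pure relevance
print(rank(candidates, cross_sell_weight=0.8))  # leans toward cross-selling
```

The weight is the product decision: the ML team can compute both scores, but someone who owns the bottom line has to choose where the dial sits.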

What about ROI? How much do you have to put in, and what does the return look like?

You need to invest in experimentation frameworks, and you have to balance that with the numbers. You also have to keep close communication with your stakeholders, which is the cultural aspect of it.

Having ML scientists and engineers working in a vacuum, delivering a model, and going off to work on the next fun technical thing is a mistake. You need a good mix of engineering, ML scientists, and product people in the trenches with your ML builders.

This helps them understand not just the pros and cons of tweaking a parameter but also the key question of whether your end goal and product metrics match the metrics your model is learning from. That will make a huge difference in the bottom-line results you see.

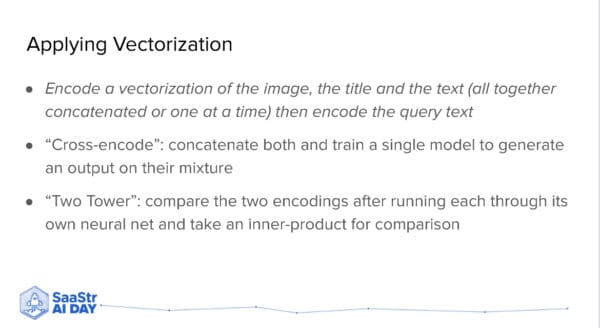

Vectorizations Are The Backbone of GenAI

In plain English, vectorization is what makes text-to-text or text-to-image matching possible. In the good ol’ days, search was keyword search. You searched with a single keyword, as you once did on Google, rather than a plain-speak sentence, to find a matching product.

Vectorization is a complex topic that can be boiled down to a simple image. In a multi-dimensional space, every word in its context and every image in its context can be represented as a point in that space.

If you’re doing vectorization correctly, then similar words from similar contexts, or similar images, will end up located in the same part of the space.

All your cat pictures end up with the cats, and all your dog pictures are close to them but not in the same place. All the fireworks images will be far away.
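The “close together” idea is usually measured with cosine similarity between vectors. The sketch below uses hand-made three-dimensional vectors purely for illustration. Real embeddings come from a trained model and have hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up vectors standing in for image embeddings.
cat_1 = [0.90, 0.80, 0.10]
cat_2 = [0.85, 0.75, 0.15]
dog = [0.80, 0.30, 0.20]
fireworks = [0.05, 0.10, 0.95]

print("cat vs cat:      ", cosine_similarity(cat_1, cat_2))
print("cat vs dog:      ", cosine_similarity(cat_1, dog))
print("cat vs fireworks:", cosine_similarity(cat_1, fireworks))
```

The two cats score highest, the dog is close but lower, and the fireworks are far away, which is the picture described above expressed as numbers.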

Context is huge. What if you say bank? “The robbers were leaving the bank and crashed into a river bank.” Those are very different uses of the word bank. Modern techniques in vectorization have done an excellent job of looking at context to figure out what you’re talking about.

This is about 80% of the backbone of GenAI and why we can interact with these models in plain English. The vectorizations are also called embeddings, because the word or image is embedded in a vector space.

Scale Easier with “Distillation”

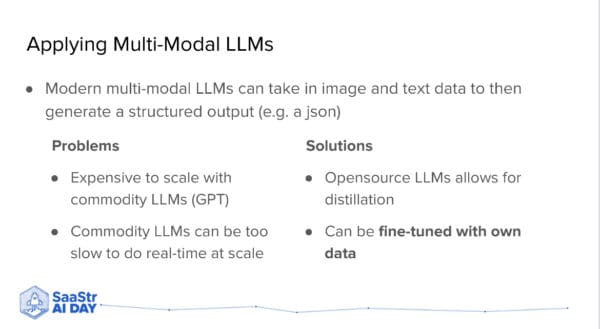

If you pay for a commodity LLM, which usually beats open-source LLMs, you’ll pay per token. That can’t be scaled to every query or use case because it’s cost-prohibitive and very slow. What do you do instead?

You might want an open-source model and do what’s called distillation. You have answers in a big model, and then you take a small model and train it to mimic the big model.
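One common way to express “mimic the big model” is a loss that compares the small model’s output distribution with the big model’s. The sketch below shows that soft-label variant under stated assumptions: the logits are made up, and the temperature value is an arbitrary choice. Another variant simply trains the small model on answers generated by the big one.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; higher temperature softens them."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical scores over three candidate answers.
teacher = [4.0, 1.0, 0.5]
close_student = [3.8, 1.1, 0.4]  # nearly agrees with the teacher
far_student = [0.5, 4.0, 1.0]    # prefers a different answer

print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

Training the small model means adjusting its parameters to push this number toward zero across many examples, so that it reproduces the large model’s behavior at a fraction of the per-query cost.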

Distillation is different from fine-tuning. Fine-tuning starts with a model of any size whose parameters were already trained on a lot of data by someone else. That data might be close to yours, but it isn’t the same.

To fine-tune, you take your specific data and use it to adapt the model to your particular domain. LLMs are remarkably good at grammar and understanding the subtleties of language. A lot of baseline things have already been done effectively, so you don’t need to build another OpenAI or Anthropic.

But for your specific data, you want it to be extra good at those nuances. That’s fine-tuning.

In terms of your tech stack, you have the LLM and then a “small” language model, small at least in comparison to the bigger one. Distillation is separate from fine-tuning and sort of sits on top of it: you train your smaller model to mimic the performance of the large commodity model.