If you work on anything related to Artificial Intelligence, you know we’re in the age of language models. For Enterprises specifically, language models can change the way we work, but they also come with very big issues. At SaaStr AI Day, Contextual AI’s CEO, Douwe Kiela, takes a deep dive into what it takes to build AI products for the Enterprise.

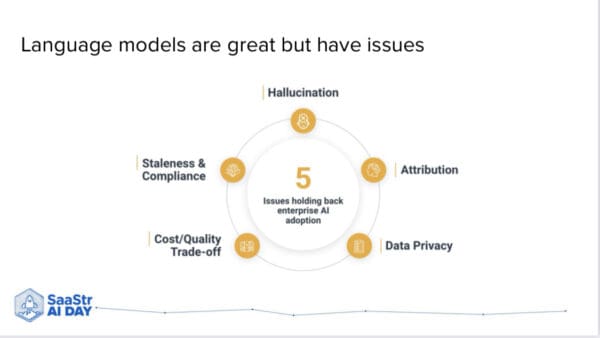

You might be familiar with some of the common language model issues.

- Hallucination with very high confidence — making up stuff that isn’t true but seems true.

- Attribution — knowing why these language models are saying what they’re saying.

- Data privacy — we send valuable data to someone else’s data center.

- Staleness and compliance — language models must be up-to-date, compliant with regulations, and able to be revised on the fly without having to retrain the entire thing every time.

The big issue for Enterprises: cost-quality tradeoffs. For many Enterprises, it’s not just about the quality of the language model. It’s also about the price point and whether it makes sense financially.

The first half of this article is a more academic look at the best approach for building AI products for the Enterprise. The second half focuses on what Enterprises care about relating to these solutions.

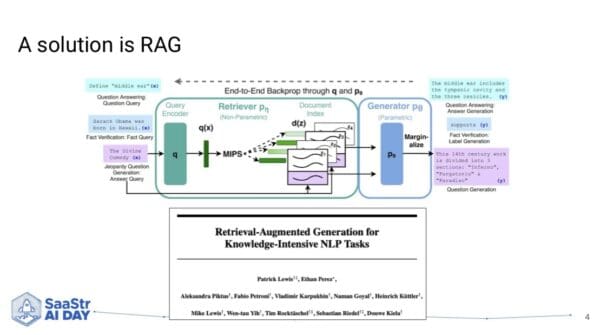

RAG is One Solution to These Problems

Douwe was part of the original work on Retrieval-Augmented Generation (RAG) during his time at Facebook AI Research. The basic idea of RAG is simple: if we have a generator, i.e., a language model, we can enrich that language model with additional data.

We want that language model to be able to operate off of different versions of, say, Wikipedia or an internal knowledge base. So, how do we give that data to the language model? Through a retrieval mechanism. We’re augmenting the generation with retrieval. A key insight of this paper, highlighted at the top, is end-to-end backprop: the retriever learns at the same time as the generator. Fast forward from 2020 to now, and you can see RAG everywhere. But the way people do RAG is wrong.

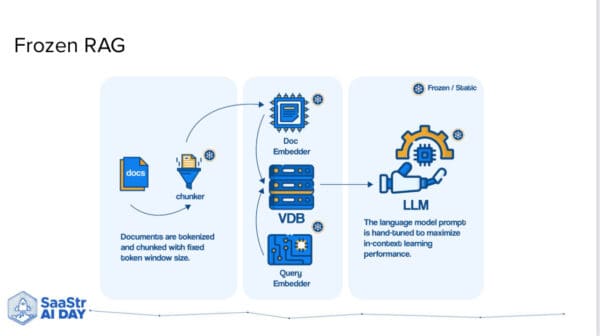

Douwe is one of the few who can say this since he was on the original paper introducing the idea. What people typically do is chunk documents into small pieces and encode those chunks using embedding models. Then, they put the embeddings in a vector database and run an approximate nearest neighbor search at query time. All of these parts are completely frozen. There’s no Machine Learning happening here, just off-the-shelf components.

At Contextual AI, they refer to this architecture as Frankenstein’s monster: a cobbled-together combination of embedding model, vector database, and language model. Because nothing in it is trained together, you have a very clear ceiling you can’t go beyond.

Frankenstein is great for building quick demos but not great if you want to build something for production in a real Enterprise setting.
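To make the frozen pipeline described above concrete, here is a minimal sketch in Python. It is an illustration under loud assumptions, not Contextual AI's or anyone's production code: `embed` is a toy bag-of-words stand-in for an off-the-shelf embedding model, `VectorStore` is a hypothetical in-memory stand-in for a vector database that does exact rather than approximate search, and the final prompt would be handed to a separate language model. Nothing here is trained, which is the point.

```python
import math
import re
from collections import Counter


def chunk(text, size=8):
    """Split a document into fixed-size word windows (the chunking step)."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text):
    """Toy stand-in for a frozen embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


class VectorStore:
    """Hypothetical in-memory 'vector database' using exact nearest neighbor search."""

    def __init__(self):
        self.items = []  # list of (chunk_text, embedding) pairs

    def add(self, chunks):
        self.items.extend((c, embed(c)) for c in chunks)

    def search(self, query, k=1):
        q = embed(query)
        ranked = sorted(self.items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def build_prompt(question, store, k=1):
    """Glue retrieved chunks onto the question; a separate, frozen LM would read this."""
    context = "\n".join(store.search(question, k))
    return f"Context:\n{context}\n\nQuestion: {question}"
```

Each component can be swapped independently, which is why this setup is quick to demo. None of them receives any learning signal from the others, so a retrieval mistake is passed straight through to the generator.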

iPhone Approach vs. Frankenstein Approach

Many people in the field think you could try to build something more like an iPhone of RAG, where all the parts are made to work together. Everything is jointly optimized as one big system where you solve a problem for a very specific use case and do so exceptionally well.

That’s what Enterprises would like to see. This is the iPhone vs. Frankenstein approach. RAG 1.0 is the old way of doing it, vs. 2.0, the new and better way.

Think of the RAG system as a brain split into two halves. The left brain retrieves and encodes the information before giving it to the right half of the brain, which is the language model. In the Frankenstein setup, the two halves of the brain don’t know they’re working together. They’re entirely separate.

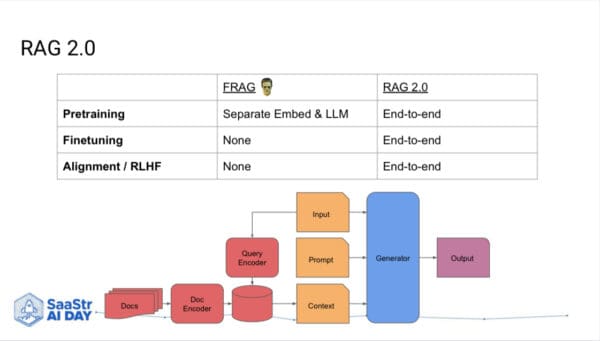

If you know you’ll do RAG, you should have these two halves of the brain be aware of each other and grow up together. Pre-train them together so that from the beginning, they learn to work together very effectively.

That would be RAG 2.0, a very specialized, powerful solution. With RAG 2.0, you train the entire system in three stages.

- Pre-train

- Fine-tune

- RLHF — reinforcement learning from human feedback

What Happens When You Take a Systems Approach?

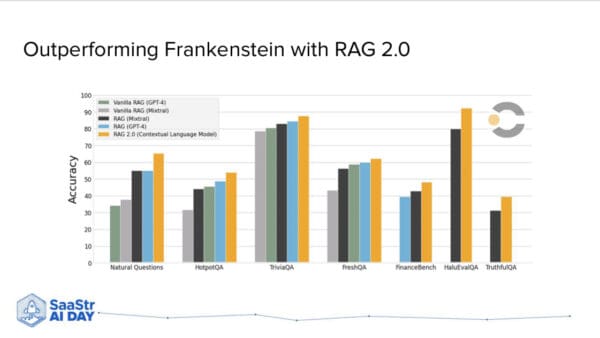

You get a system that performs much better than a Frankenstein setup. Douwe believes this is the future of Enterprise solutions.

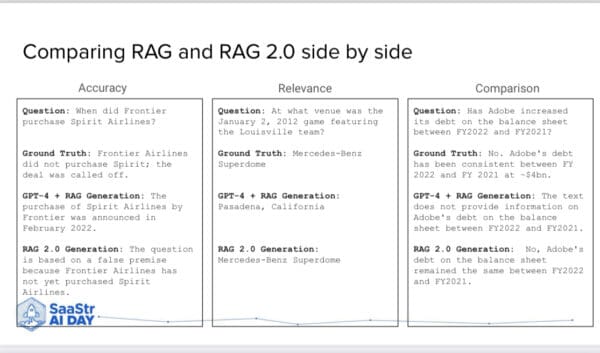

Let’s look at an example in a side-by-side comparison of Frankenstein vs. RAG 2.0. You ask the language model, “When did Frontier purchase Spirit Airlines?” The purchase never happened because the deal was called off.

If you give this to a Frankenstein system, it will hallucinate an answer about Spirit being purchased, which isn’t true. You get a lot of mistakes with relevance, too. All language models are pretty good, but what matters is how you contextualize the language model. If you give it the wrong context, there’s no way it will get it right.

In this comparison example, you also want to be able to retrieve different facts simultaneously. These are the more sophisticated things we’ll be able to do with this technology in the future.

Should You Have Long Context Windows?

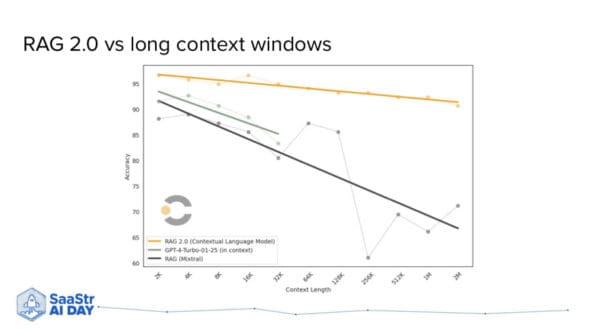

One question Douwe often gets regarding RAG is about long context windows. When Google announced Gemini 1.5, many people declared the death of RAG because you don’t need retrieval if you can fit everything in the context window. That’s definitely something you can do, but it’s very expensive and inefficient.

For example, say you put an entire Harry Potter book in the context window of a language model and then asked a simple question like, “What’s the name of Harry’s owl?” You don’t have to read the entire book to find the answer to a simple question. In RAG, you search for “owl” and find relevant information. It’s much more efficient.
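The efficiency argument can be sketched with a toy comparison. The passages below are made up for illustration, word counts stand in for tokens, and `keyword_retrieve` is a hypothetical helper doing a plain substring match rather than a real retriever. The only point is the difference in how much text the model has to read.

```python
def keyword_retrieve(passages, keyword, k=2):
    """Return up to k passages that mention the keyword (case-insensitive)."""
    hits = [p for p in passages if keyword.lower() in p.lower()]
    return hits[:k]


def word_count(texts):
    """Crude proxy for prompt size: total whitespace-separated words."""
    return sum(len(t.split()) for t in texts)


# Made-up stand-in for a whole book; a real one would be vastly longer.
passages = [
    "Harry lived with the Dursleys on Privet Drive.",
    "Harry's owl was called Hedwig.",
    "The train to school left from platform nine and three-quarters.",
]
question = "What's the name of Harry's owl?"

# Long-context approach: the model reads everything plus the question.
full_words = word_count(passages) + word_count([question])

# Retrieval approach: the model reads only the passages that mention "owl".
retrieved = keyword_retrieve(passages, "owl")
rag_words = word_count(retrieved) + word_count([question])

print(f"long context: {full_words} words, retrieval: {rag_words} words")
```

With three short passages the gap is small, but the long-context prompt grows with the size of the book while the retrieval prompt stays roughly constant.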

What Enterprises Care About

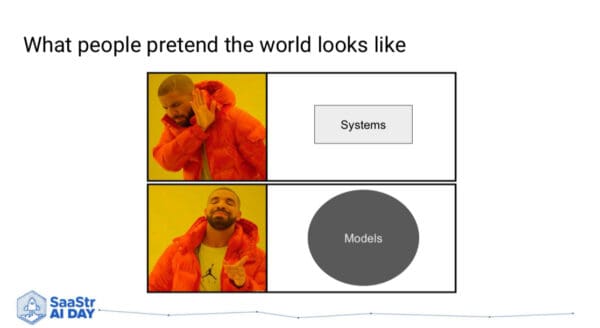

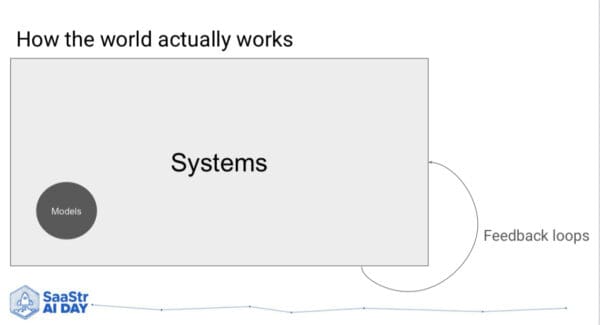

SaaStr AI Day is about Enterprises and how things make it into the wild. Exciting things are happening in Enterprise around this technology, and they’re starting to adopt it. 2023 was the Year of the Demo, and 2024 is the year of production deployments of AI in Enterprise. People pretend the world is such that models are the most exciting things and that systems don’t really matter.

If you’re an AI practitioner and work in a big Enterprise, you know how the world really works. Machine Learning in practice is mostly about systems and only a little about the model. The model is about 10-20% of the system when you want to solve a real problem in the world.

The other thing that matters is the feedback loop, making sure the system with this model can be optimized, get better over time, and solve your problem.

Efficiency Matters a Lot for Enterprise

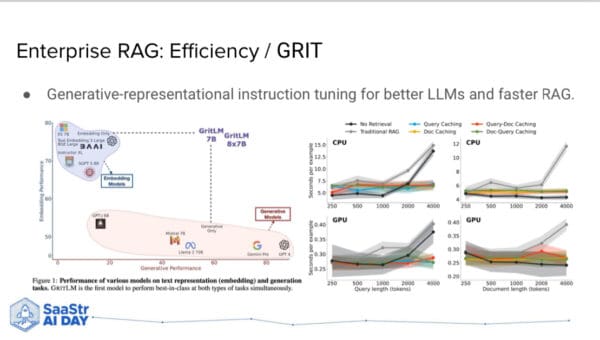

While academics might not care much about efficiency, Enterprises sure do. Contextual AI has work called GRIT, Generative Representational Instruction Tuning, which shows that a single language model can be best-in-class at both representation and generation. The same model can be good at both.

Another thing that Enterprises care a lot about is multi-modality. This means you can make language models that are enhanced to see, where you take computer vision models that turn images into text, and you feed that text directly into your RAG system or language model. This endows it with multi-modal capabilities without needing to do anything really fancy.

Contextual AI’s Enterprise Observations

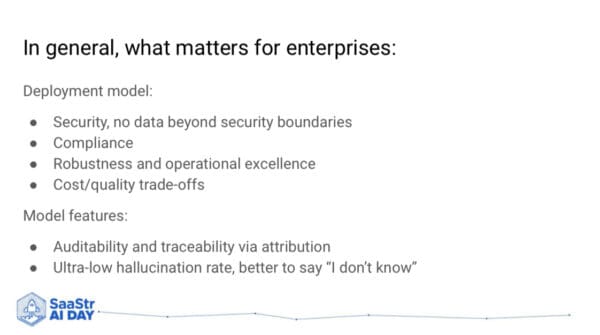

- Enterprises care about the quality of the technology, but sometimes they care more about the deployment model. Security really is the most important question because companies don’t like when data has to go beyond their own security boundaries (and for good reason!).

- No single Enterprise deployment is only about accuracy. It’s always about more than that with things like latency, speed, inference, compliance, deployment model, etc. Model features beyond accuracy also add something, like auditability and traceability through attribution, and a model saying “I don’t know” instead of making something up randomly and risking the business.
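The attribution and “I don’t know” behaviors in the last observation can be sketched as a thin wrapper around retrieval. This is a toy illustration only: the word-overlap score, the `min_score` threshold, and the `Passage` type are all assumptions made for the example, and a real system would have a language model generate the answer from the retrieved passage rather than return the passage text itself.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    source: str  # where the text came from, kept for attribution
    text: str


def overlap_score(question, passage):
    """Fraction of question words that also appear in the passage (toy relevance score)."""
    q = set(question.lower().replace("?", "").split())
    p = set(passage.text.lower().replace(".", "").split())
    return len(q & p) / len(q) if q else 0.0


def answer_with_attribution(question, passages, min_score=0.5):
    """Answer only when the best passage is relevant enough; otherwise abstain."""
    best = max(passages, key=lambda p: overlap_score(question, p), default=None)
    if best is None or overlap_score(question, best) < min_score:
        # Abstain instead of making something up.
        return {"answer": "I don't know", "sources": []}
    # Return the supporting text together with its source for auditability.
    return {"answer": best.text, "sources": [best.source]}
```

For example, given one passage from a hypothetical `policy.pdf` stating that refunds are processed within 14 days, the question “When are refunds processed?” returns that passage with its source, while an unrelated question returns “I don't know” with no sources.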

Enterprise Problems Are Very Different From Consumer Problems

If we look towards where we’re going, there’s a lot of excitement about AGI. It’s a gateway to the rest of the world learning about AI. But if you look at Enterprise problems, they’re very different from consumer problems.

AGI is for the consumer market, where you don’t know what consumers want.

In Enterprise, they usually know exactly what they want. It’s a very constrained problem and a very specific workflow. Enterprise workflows are specialized, so what you want is Artificial Specialized Intelligence and not necessarily AGI.

For example, if you have a system that has to give financial product recommendations or antibody recommendations or help with experimental design, it doesn’t need to know about Shakespeare or quantum mechanics.

Specialization gives you better performance and cost-quality tradeoffs, and that’s what Enterprises care about.

Key Takeaways

Douwe has two simple observations.

- It’s about systems, and models are 10-20% of those systems. We should all be thinking about this from a systems perspective if we want to start productionizing AI in Enterprises.

- Specialization is the key to having the optimal tradeoff between cost and quality.

What This Means for Enterprises and AI Practitioners

- Data is the only moat. This is true now and will be more true in the future. If you’re an Enterprise, you have really valuable data, and you want to be careful with where you send that data because, in the long term, compute will get commoditized. Algorithms aren’t that interesting anymore, and data will be the main differentiator for all of us.

- Be much more pragmatic, and don’t leave performance on the table. If you’re pragmatic in choosing systems and don’t follow the hype cycles or pick the model with the maximum number of parameters because it sounds cool, you can get much better performance at a much better tradeoff point.

- We are just getting started. Sometimes, it feels like OpenAI is so far ahead or AI is almost solved. But when you’re in the field and seeing how it’s getting deployed, it’s only just starting to happen. These models are relatively easy to build, but building systems is much harder and more time-consuming.